Previously, I reported on my attempt to use Stable Diffusion.

Dan Luu posted it to Hacker News, and there were some comments. Here are some responses:

Comments suggesting img2img, masking, and AUTOMATIC1111: that’s what I was doing, as I think the images in the previous post make clear.

Comments suggesting that maybe my prompt was no good: I didn’t put in anything like “oneiric”, or “blurry”, or “objects bleeding into each other”, or “please fuck up the edges.” So I don’t think it’s reasonable to blame my prompt for the particular problem that I was complaining about. One commenter suggested that I should have said “pier” instead of “dock”, but actually, judging by Google Images, I was locking for a dock (a place you tie up your boat).

Comments suggesting learning to draw, or that the problem is that I’m not an artist: actually, I am a bit of an artist: I make weird pottery.

I’m just not just not an illustrator. I have dysgraphia, which makes illustration challenging. The example I like to give, when I explain this is: I go to write the letter d, and the letter g comes out. That’s not because I don’t know what the difference is, or because I can’t spell. It’s because somehow my hand doesn’t do what my brain wants. Also, even if I were an illustrator, I would still like to save some time.

The problem isn’t that I can’t figure out the composition of scenes: as the post shows, I was able to adjust that just fine. The problem is that the objects blur into each other.

Others suggested that I could save time by using 3d rendering, as Myst did. This seems preposterous: Myst took a vast amount of time to model and texture. Yes, I would only have to model a place once to generate multiple images. But the modeling itself is a large amount of work. And then making the textures brings me right back to the problem of how to create images when I can’t draw.

Some commenters suggested that in fact I could get away with fewer than 2500 images. On reflection, this might be right. From memory (it’s been a while since I played Myst), the four main puzzle ages each have maybe 20 locations, with an average of, say three look directions per location. Then there’s D’ni (only a few locations), and Myst Island itself, which has roughly one age worth of puzzles per puzzle age. That would be about 500 images. Maybe they are counting all of the little machine bits as separate images? Maybe I am forgetting a whole bunch of interstitial locations? Still, 500 images is also outside of my budget, though less so.

Comments suggesting that it takes a lot of selection: yes, this is after selecting from roughly a dozen images each time. Generating and evaluating hundreds of images would not be a major time savings. If the problem were that I’m particular about composition, it would be reasonable to search through many images to find a good base (and, indeed, that was the part of the test where I was casting the broadest net). The problem is that I’m picky about correctness, and picking through a bunch of images for correctness is slow and frustrating.

Comments suggesting photoshopping afterwards (as opposed to “just draw the approximate color in the approximate place” during img2img refinement): If I could draw, I would just draw.

Comments suggesting many iterations of img2img, photoshopping, back to img2img, etc: 1. Ain’t nobody got time for that. 2. My post shows me trying that: it doesn’t solve my fundamental problem.

Comments suggesting MidJourney: It’s basically a fine-tuning of the Stable Diffusion model, so it’s likely to have the same problems with blurry and incoherent objects. Its house style is pretty fuzzy, which hides some of the incoherence, but for a game like this it’s important to know exactly where you are, and where an object’s edges are. The Colorado State Fair image (which was generated in part by MidJourney) shows some of the incoherence: look at the woman in the center – what’s that stuff on her left side? Part of the lectern? A walking stick held by the third arm coming out of the waist of her dress? Also, trying to do any refinement through a Discord chat seems even more painful than trying to use AUTOMATIC1111, which is already pretty slow and clunky.

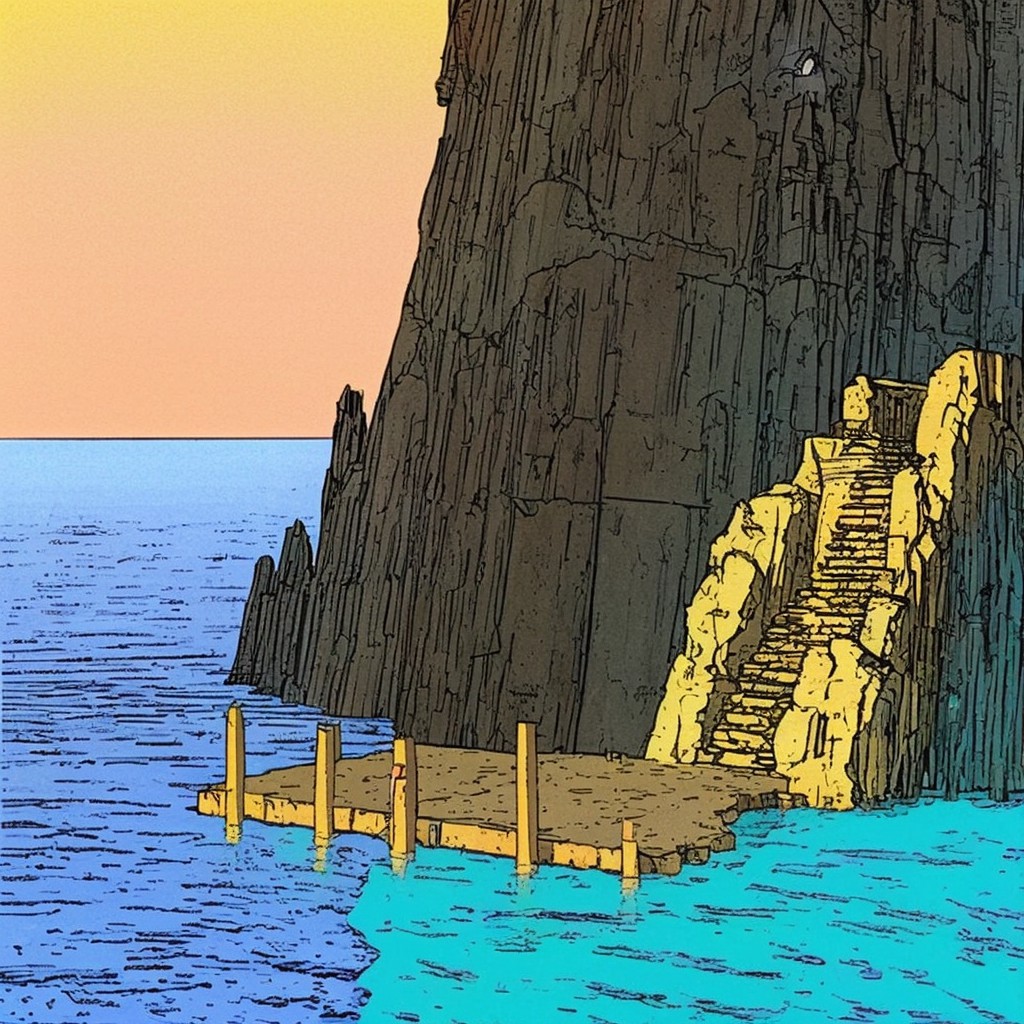

One comment with an improved image: well, it’s a physically plausible object now, if you don’t mind that the dock is now made of stone instead of wood. I wonder why I was unable to get that. The image:

Also, the rightmost post is still sort of fading into the dock at the top. Maybe I was just unlucky? I don’t think the prompt was any better than mine. That gives me hope for the future. Next time a new model comes out, I’ll probably try again.