Here’s an update on my game Middles.

Dominus told me that Bill Gosper was also inspired by …MEOW…, and made a version of this game. His version allows only four-letter substrings, and allows those substrings to occur anywhere in the word (so GULC would be valid). When Dominus mentioned Gosper’s work, I assumed that it was in, like, 1983, since Gosper is fifteen years older than my parents, but it was actually just a couple of years ago. So I’m not that far behind the curve. Update: No, actually I am way late. Dominus further informs me that he learned about it in More Mathematical People (1990), p. 114. Gosper attributes it to a friend of his.

A former co-worker mentions Wordiply, a Guardian game with non-unique middles. I hate it, because the longest words are very often a mess of affixes. Consider “utel”: the best word is “absofuckinglutely”, which contains both an infix and a suffix; the best word they are likely to actually accept is “irresolutely”, which contains both a prefix and a suffix. And they let you riff on affixes, so you can do things like remitting, remittingly, unremittingly; this is often a totally reasonable strategy. There’s nothing wrong with affixes, but there’s also nothing interesting about affixes.

A game that plays somewhat like Middles is Superghost; Jed Hartman describes it nicely; there, the goal is not to make a word. Another friend mentioned it in the context of a James Thurber piece from 1951 (paywalled; your library may have New Yorker archive access). The logical next step is, of course, Superduperghost, which allows inserting letters at any position. Jed Hartman also describes this version; Wikipedia says it wasn’t invented until 1970, which seems surprising.

Hartman also mentions “the occasional several-minute wait between letters”, which points to a problem with this whole category of games: without a time limit, you can often spend hours thinking about a turn. Gil Hova’s Prolix solves this problem with a timer. This is a problem I complain about whenever someone wants to play Codenames. Yes, Codenames comes with a timer, but nobody uses it, and it’s too long anyway. This is one reason I wanted a game like Surfwords.

There are often a lot of ways to approach the same game concept. Today I was at MoMath and discovered Balance Beans. I had definitely considered making a game on this premise, but mine was going to be a computer game, and it was going to involve trucks trying to drive across the balance beam, with some slop in the system to allow a truck to be driven onto just one side. Also, my trucks were going to have different weights at the same one-square size, as opposed to having size and weight conflated and having connected groups. After playing it, I think their choices might be better. But mine would have offered some neat sequencing puzzles. (Just a note: their physical design is a bit crap; if you aren’t careful while removing a piece from the heavy side, the motion will disrupt some of the other pieces).

PS: I’ve also corrected a flaw in Middles. Dominus and another player mentioned that yesterday’s EYAN could be the somewhat obscure word ABEYANCE instead of the expected CONVEYANCE. I’ve added a button for this situation, which lets you enter an alternate answer and (if your alternate answer is on my long word list and you entered a letter that would have been correct for the alternate answer) gives you a point back. This is a weird solution, but I didn’t want to just accept alternate answers as you type them, since that could lead a user to a word that they think is obscure.

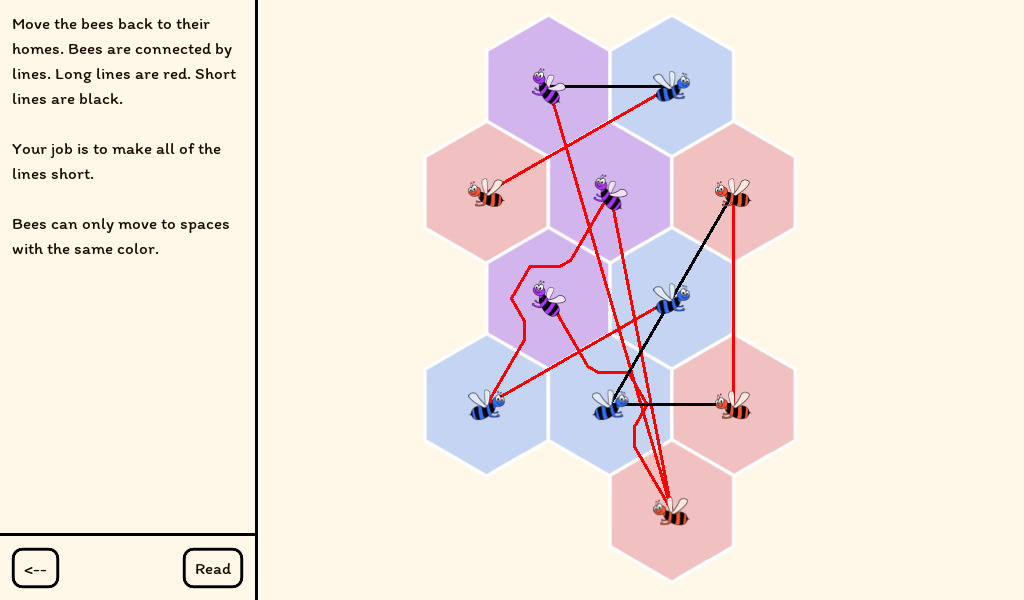

I made Middles, a daily word game.

A while ago, I worked at a company whose initials were TS. This led to some fun times: Accounts that started with TS were assumed to be system accounts, so when a human named Tsutomo (or something) joined the company, his account got treated weirdly. IIRC we renamed his account rather than changing the system.

I was working on version control, and we wanted to find a name for the new system, so I suggested “nuTShell”, being the best word I could find that contains the letters “ts” in the middle. My team lead immediately replied, “O God, I could be bounded in a nut shell and count myself a king of infinite space.” Which is exactly what I was thinking. But nobody else seemed to appreciate the Shakespeare, so we went with a different name.

And then there’s this Tumblr post. (It will probably get lost, so for future reference, it reads: “List of words containing “meow”: meow, meowed, meowing, meows, homeowner”). The original version of that Tumblr post (since deleted) is from 2016; I don’t remember when I saw it. But it’s been living in my head ever since, and eventually, I realized that there was a game there.

The game works like this: I give you the middle of a word, and you try to guess the rest, one letter at a time. Every time you guess wrong, you lose a point. At zero points, you lose. The secret word is chosen so that its middle is unique among commonly-known words (ignoring plural nouns and singular verbs). For instance, the middle OBC appears in the common word BOBCAT and no other common singular word (it also appears in the uncommon word MOBCAP, a kind of bonnet that nobody has heard of).
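Here is a minimal sketch of that uniqueness check in Python. It assumes a middle needs at least one letter on each side (so MEOW in MEOWED doesn’t count), and that the word list passed in has already been cut down to common words with plural nouns and singular verbs removed; that filtering, which is the hard part, isn’t shown. The function names are hypothetical.

```python
from typing import Optional


def words_with_middle(middle: str, words: list[str]) -> list[str]:
    """Return the words that contain `middle` strictly inside them,
    i.e. with at least one letter before it and one letter after it."""
    middle = middle.lower()
    # word[1:-1] drops the first and last letter, so any match inside it
    # is guaranteed to be flanked by letters on both sides.
    return [w for w in words if middle in w.lower()[1:-1]]


def unique_middle(middle: str, common_words: list[str]) -> Optional[str]:
    """Return the single common word with this middle, or None if the middle
    is ambiguous (several words) or unused (no words)."""
    hits = words_with_middle(middle, common_words)
    return hits[0] if len(hits) == 1 else None


# The Tumblr list: only "homeowner" has MEOW strictly inside it.
meow_words = ["meow", "meowed", "meowing", "meows", "homeowner"]
print(words_with_middle("meow", meow_words))  # ['homeowner']
```

If MOBCAP were on the common list, `unique_middle("obc", ...)` would return None, which is exactly why the choice of word list matters so much.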

There are a lot of words that have unique middles that nonetheless are bad candidates. DUSTRIO is bad because it’s obviously INDUSTRIOUS. WJ is bad because it’s NSFW. INGEM is bad because while INFRINGEMENT is the more common word, IMPINGEMENT is common enough that some players will know it. For this determination, I used a combination of Brysbaert, M., et al and my own personal judgement (they think impingement is non-prevalent, but I think it’s prevalent, possibly wrongly, because it’s something I’ve experienced). Also, Brysbaert is lemmatized, which makes life harder.

But I have about 4,000 words (I still might remove some when I take another look), which is over a decade of game before repeating. Not quite Wordle, but not bad.

I don’t want to review the whole game. But I do want to talk about one puzzle that I found infuriating. No, not that one, which could so easily have been improved. A different one. Not because it was hard (it wasn’t). But because it had a bizarre design. I’m going to spoil some minor aspects of the puzzle, but not the interesting part.

Here’s the set-up. Actually, here’s the pre-set-up: puzzles in the game are both entirely arbitrary and entirely diegetic: some asshole has stranded you on this puzzle archipelago and is forcing you to solve his puzzles.

OK, now the set-up: You’re on an island. There are two other islands, one to the South and one to the North, and you can’t presently get to either island.

There’s a green laser thing shooting out of a crystal. You can rotate a reflector doohickey to make it point to another crystal on the North island, at which point you can press a button and “ride” the laser across. There’s also a crystal on the South island, but you can’t point to that one because it’s too high up. There are other reflector doohickeys visible but not reachable.

When you get to the North island, you find a machine (actually, two, but they function in tandem), and more reflectors. The machine controls (in a way that I will leave vague) the enablement and orientation of all of the reflectors. You also find a piece of paper which describes a particular set of reflector orientations that it wishes you to achieve.

If you manipulate the machine appropriately, you can make the reflectors assume this orientation (and enable them all). This machine is pretty cool. Not the world’s most innovative puzzle, but (unlike many of Quern’s puzzles), not readily susceptible to brute force. I did not regret the minutes I spent figuring out how to make it do the thing.

Completing the pattern gives you a new way to travel back to the central island. It also makes a lever pop up, which lets you adjust the initial reflector upwards so you can hit the crystal on the South island.

This is stupid.

The path that the new pattern sends you on takes you around the otherwise inaccessible back side of the central island. Instead of having the South island crystal high up, they should have had it obstructed so that it could only be reached from the back. You would still need to do roughly the same reflector manipulation, but instead of doing it because a piece of paper told you to, you would be doing it because it would directly let you reach your goal. There’s no need for the lever, in that case, and no need for the piece of paper. (This also requires either a slight change in the mechanics of crystals, or one more crystal, but neither would break the rest of the game).

This would have a subtly different effect, in that you couldn’t travel directly from the central island to the South island. But that’s not a path you need to take more than once (OK, twice, I didn’t notice something the first time I was there). And anyway, once you’re there, a fast return path could have been provided (Quern does a lot of this).

My design is better for two reasons:

- It is more interesting to figure out how to use a tool to accomplish your goal than it is to figure out how to input a pattern because a piece of paper told you to.

- The lever is an unnecessary piece of hardware that doesn’t contribute to making the puzzle harder or more interesting.

Possibly even better would be to have the reflectors arranged roughly as they currently are, but while you’re riding the laser the long way around the back of the island, you can see something that you would then need to use to decide how to change the routing. I am not sure this idea would actually be better, because I found the laser-riding to be headache-inducing. But at least it would have fully used the possibilities of the set-up.

Maybe you could argue that it’s diegetic that the puzzle has this weird lever epicycle (because the dude who has trapped you here is just not a good puzzle designer), but that is the last refuge of the scoundrel.

Have you noticed the turds?

Before I begin, let me say that I’m not dissing Generated Adventure or the people who created it. They made a very impressive tech demo in 72 hours, and if you don’t look too closely, it’s rather pretty. They were under tight time pressure, and they were specifically aiming to use only AI tools. They did better than I would have in 72 hours (although as a parent, the idea of devoting 72 straight hours to anything is totally unimaginable). It’s good enough to make me consider Deform over Godot for a project like this (I’m sure either could handle it, but they did it so fast!).

But I feel like something that gets lost in the discussion of AI-generated artwork is the turds. Here’s an example of what I mean: it’s one of the sets of images that Midjourney generated for Generated Adventure (I don’t think any of them ended up being used for the game).

At first glance, it’s fine. But then you notice the turds.

Here’s what I mean. Green marks blurry object edges, which I’ve complained about before. Some of these might be the webp compression of the image from the Medium post, so I haven’t been too harsh here. Purple marks bad angles: shadows that don’t match, a wall that’s also a floor, and different-length legs on a … well, I have no idea what that thing is. Blue generally marks things that are unidentifiable, although in the top-right one, it marks the only floor-tree (?) that has a pot. Unidentifiable stuff is a venial sin; it’s a fantasy game, and sometimes there’s weird stuff in a fantasy world.

Red marks the real turds: stuff that is so out-of-place as to break immersion. Often it’s unidentifiable, but sometimes it’s just in the wrong spot (like the faucet in the top left, which isn’t over a sink). The bottom left has a fractal turd: the roof of the hutch has a weird asymmetrical fold at the top, but also, a bedroom should not contain a hutch with an outdoor roof.

Once you start looking at AI-generated art, the turds are everywhere. Midjourney often “solves” this by doing images that are impressionistic rather than representational. I googled for “Midjourney dragon”, and this was the first hit:

Other than the inexplicable human figure (?) in the center of the image walking on water, this doesn’t have major turds — but it’s also clearly intended to be impressionistic rather than to represent a real scene. I should note that several of the other images from that source do have turds, such as phantom Chinese-looking characters. Side note: I wonder why the dragons are disproportionately looking to the left. In one image, the main dragon head is looking to the right, but clearly that was unacceptable, so there’s also a secondary head-turd looking to the left.

Music

I’m not any good at music, so I can’t immediately identify the musical turds in the AI-generated music from AIVA. This is what Generated Adventure used. AIVA seem to be generating MIDI files, so they won’t have the same sorts of errors as AI image generators, which operate on a pixel level. But I can say that their prompt following is bad. I uploaded the first track of the Surfwords soundtrack to AIVA. Here’s what it sounds like:

That’s a track composed by a human. One of the tracks that I sent him as inspiration was this one (skip to 0:24):

He nailed it! Patrick’s track is maybe a little less skronky, but it definitely captures the feeling and instrumentation.

Compare AIVA (I uploaded Patrick’s track rather than the Moon Hooch as an “influence”, since I have the rights to it):

Sure, the BPM is the same (according to a random online BPM detector). Maybe the key signature is also the same? But it’s not even the right instruments — AIVA suggested “Clean Rock Ensemble” for this track, but my track is brass. (AIVA’s generated tracks with the same influence on the Brass Ensemble setting sound even less like the original).

If you don’t have a vision in mind for what your music sounds like, AIVA is maybe fine. Like, it sounds like generic music. But if you do have a vision in mind (as I did for the Surfwords music), AIVA is not there yet.

Conclusion

Please don’t tell me about how I could fix this by twiddling the Midjourney or AIVA prompts.

AIVA’s config options seem to be designed for people who understand music much better than I do. Maybe it’s a good tool if that’s your situation. But why offer to let me upload an influence, if you aren’t going to do anything other than extract the BPM and key signature? At least get the instruments right!

And yes, you can paint out the turds in Midjourney and tell it to fill in the area again until it gets it right. But the shadows are still going to be annoying, and the iteration time is painfully slow.

Soon, this might be fixed. But not today. It’s frustrating to be so close, and to have everyone hyping how close we are, and to be stuck with turds. (Also please don’t say mean things about the Generated Adventure folks, who set out to do a thing and successfully did the thing; none of this is directed at them).

Deco Deck is my fourth indie game. It’s available today on iOS, Android, and Steam.

About the game

Deco Deck is a new kind of logic puzzle. If you like KenKen or Two Not Touch, you might like Deco Deck.

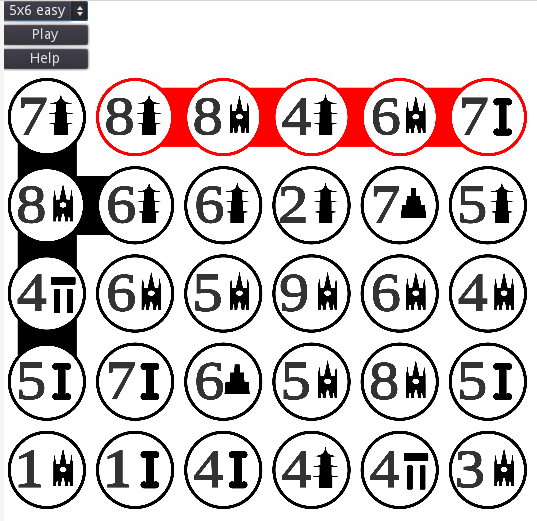

Here’s what a mini puzzle looks like:

Every card has one of five suits, and a number from 1-9.

The goal is to link up the cards in connected groups of five, such that each group forms a valid “hand”. The hands are: straight, flush, full house, five-of-a-kind, and rainbow. A rainbow is one card of each suit.
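A sketch of what checking a hand might look like. The encoding is an assumption: each card is a `(suit, rank)` pair, with suits written as the letters A through E and ranks 1 through 9. I’m also assuming a straight is five consecutive ranks with no wraparound from 9 back to 1. A group of five cards can satisfy more than one hand at once, so the function returns every hand it matches.

```python
from collections import Counter

Card = tuple[str, int]  # (suit, rank): suits "A"-"E", ranks 1-9 (assumed encoding)


def hand_types(cards: list[Card]) -> set[str]:
    """Return the names of every hand these five cards form (empty if none)."""
    if len(cards) != 5:
        return set()
    suits = {suit for suit, _ in cards}
    ranks = sorted(rank for _, rank in cards)
    rank_counts = sorted(Counter(ranks).values())

    types = set()
    if len(suits) == 1:
        types.add("flush")
    if len(suits) == 5:  # the deck has five suits, so this is one of each
        types.add("rainbow")
    if rank_counts == [5]:
        types.add("five-of-a-kind")
    if rank_counts == [2, 3]:  # three of one rank, two of another
        types.add("full house")
    # Assumption: five consecutive ranks, no 9-to-1 wraparound.
    if all(b - a == 1 for a, b in zip(ranks, ranks[1:])):
        types.add("straight")
    return types


def is_valid_hand(cards: list[Card]) -> bool:
    return bool(hand_types(cards))


print(sorted(hand_types([("A", 1), ("B", 2), ("C", 3), ("D", 4), ("E", 5)])))
# ['rainbow', 'straight']
```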

Each puzzle has only one solution.

To solve, just link up the cards:

Of course, they’re not all that easy. That puzzle is easier than any puzzle in the game itself. It is the trivial puzzle that I use in the tutorial. I chose it so that there’s no way to go down a wrong path. And it’s got the flush of blue hexagons, which the tutorial is able to describe as “blue” (for people with normal vision), or “hexagons” (for colorblind people). The other shapes don’t have short, unambiguous names.

Here’s a harder puzzle:

Both of the above hands, marked in gold, are valid hands — but one of them isn’t part of the actual solution.

Puzzles come in four sizes. The above is a “large”, which is 90 cards. You already saw mini (30); there are also medium (60) and huge (120) puzzles. Of course, it would be possible to make puzzles of nearly any size, but it wouldn’t be reasonable to put them on a phone screen. Even the huge is pushing the limits of finger dexterity on smaller phones.

Design

As I mentioned at the end of my post about Mosaic River, a dilemma in the design of the tile placement subgame put me in mind of logic puzzles. So I built a puzzle generator. The first version just spat out the puzzle in tab-separated value format:

| A3 | A4 | A5 | B6 | C9 |
| D2 | D5 | E1 | C5 | C7 |
| B4 | A2 | A1 | C4 | C7 |
| C7 | A2 | A2 | A9 | D9 |
| B2 | E3 | E2 | B1 | A9 |
| C8 | A8 | D5 | B9 | A9 |
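If you want to try the spreadsheet route yourself, reading and writing that format takes only a few lines. This sketch assumes each cell is a suit letter (A to E) followed by a single rank digit, with tabs between cells and one row per line; `to_tsv` and `parse_tsv` are hypothetical names, not the generator’s real code.

```python
def to_tsv(grid: list[list[tuple[str, int]]]) -> str:
    """Render a grid of (suit, rank) cards as tab-separated text."""
    return "\n".join("\t".join(f"{suit}{rank}" for suit, rank in row) for row in grid)


def parse_tsv(text: str) -> list[list[tuple[str, int]]]:
    """Parse tab-separated cells like 'A3' back into (suit, rank) pairs."""
    grid = []
    for line in text.strip().splitlines():
        row = []
        for cell in line.split("\t"):
            cell = cell.strip()
            suit, rank = cell[0], int(cell[1:])
            if suit not in "ABCDE" or not 1 <= rank <= 9:
                raise ValueError(f"bad card {cell!r}")
            row.append((suit, rank))
        grid.append(row)
    if len({len(row) for row in grid}) != 1:
        raise ValueError("rows have different lengths")
    return grid


SAMPLE = "\n".join([
    "A3\tA4\tA5\tB6\tC9",
    "D2\tD5\tE1\tC5\tC7",
    "B4\tA2\tA1\tC4\tC7",
    "C7\tA2\tA2\tA9\tD9",
    "B2\tE3\tE2\tB1\tA9",
    "C8\tA8\tD5\tB9\tA9",
])
grid = parse_tsv(SAMPLE)
print(len(grid), len(grid[0]), sum(len(row) for row in grid))  # 6 5 30
```

Six rows of five cards is 30 cards, which is a mini.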

Then I popped it into a spreadsheet and used the coloring functions there to mark the hands. (I guess I could have printed it and used a pencil, but I don’t keep my printer connected). This proved that puzzles were (a) solvable by humans and (b) fun to solve. I immediately sent the uncolored spreadsheet to some friends, with instructions. But then I realized that building a front-end in Godot would be really easy.

OK, not the most beautiful, but it only took me a few hours to bang together. Godot spat out an HTML5 version, and I told my friends to disregard the spreadsheet and try the interactive version. The feedback was positive. One friend noticed that the difficulty range wasn’t very large — later, I’ll explain how I solved that.

I decided to put in some more work to make it prettier:

I recognize that this is not in fact much prettier. I wasn’t really sure what it should look like. Then a friend introduced me to his friend, who happened to be a graphic designer. I hired him to do the visual design. We considered all sorts of themes: Japanese flowers, butterflies, amoebas, and 90s cyberpunk. But I decided that I liked Art Deco best. It’s very much a programmer’s sort of art — produced by the intersections of simple shapes at common angles. Designed to be built by craftspeople rather than artists.

Without Benjamin, Deco Deck would not have looked nearly this good. I tried to make some Deco-style cards, but they ended up visually busy, and not really very Deco (that is, not vertically symmetric). It’s actually a little tricky in 2023 to do Art Deco, because our eyes have been trained by modern styles. So, we expect bezier curves instead of circular arcs, since that’s what’s easy to do in vector editors.

Later, I tried to design the card backs (a visual flourish seen only for an instant during loading), and instead of Art Deco, I ended up with Fart Deco.

So I’m very glad that I had a good designer to work with.

Rainbows

When I first designed the game, I didn’t think of rainbows, because they’re not a standard poker hand. If they were a poker hand, they wouldn’t be a strong one: with this deck of cards, they would lose to a straight. But I got to thinking about how suits were only used for flushes, and what a shame that was, and then I remembered that I had five suits. Once I added rainbows, I found myself hooked. The rainbow’s weakness as a poker hand is its strength as a puzzle component: it increases the number of hands that each card can be part of, making the puzzle harder. This fixed the difficulty range problem that my friend had identified.

Puzzle generation

Building a puzzle generator was straightforward: I started by building a little library that would generate a random pentomino tiling of given dimensions. The simplest representation of such a board during the generation process is a bitmask, which fits in a register even for huge boards (120 cards), so long as you’re willing to use 128-bit SSE registers.
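Here is one way to do the tiling, as a sketch rather than the game’s actual library. It generates the 63 fixed pentominoes (the 12 free shapes in every rotation and reflection), then backtracks: it always fills the first empty cell in row-major order, trying shapes in a random order. Python integers are arbitrary precision, so here the bitmask fits for any board size; the register limit only matters in a fixed-width implementation. The shape table and function names are my own.

```python
import random

# The 12 free pentominoes as (row, col) cells; orientations are generated below.
FREE_PENTOMINOES = [
    [(0, 1), (0, 2), (1, 0), (1, 1), (2, 1)],  # F
    [(0, 0), (0, 1), (0, 2), (0, 3), (0, 4)],  # I
    [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1)],  # L
    [(0, 1), (1, 1), (2, 0), (2, 1), (3, 0)],  # N
    [(0, 0), (0, 1), (1, 0), (1, 1), (2, 0)],  # P
    [(0, 0), (0, 1), (0, 2), (1, 1), (2, 1)],  # T
    [(0, 0), (0, 2), (1, 0), (1, 1), (1, 2)],  # U
    [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)],  # V
    [(0, 0), (1, 0), (1, 1), (2, 1), (2, 2)],  # W
    [(0, 1), (1, 0), (1, 1), (1, 2), (2, 1)],  # X
    [(0, 1), (1, 0), (1, 1), (2, 1), (3, 1)],  # Y
    [(0, 0), (0, 1), (1, 1), (2, 1), (2, 2)],  # Z
]


def orientations(cells):
    """All distinct rotations and reflections, shifted to start at (0, 0)."""
    shapes = set()
    pts = list(cells)
    for _ in range(4):
        pts = [(c, -r) for r, c in pts]  # rotate 90 degrees
        for variant in (pts, [(r, -c) for r, c in pts]):  # and its mirror image
            min_r = min(r for r, _ in variant)
            min_c = min(c for _, c in variant)
            shapes.add(tuple(sorted((r - min_r, c - min_c) for r, c in variant)))
    return shapes


FIXED = sorted({o for p in FREE_PENTOMINOES for o in orientations(p)})  # 63 shapes


def random_tiling(rows, cols, rng=random):
    """Randomly tile a rows x cols board with pentominoes (repeats allowed).

    Occupied cells live in one int bitmask, bit r * cols + c. Returns a list
    of pieces, each a list of five (row, col) cells, or None if impossible.
    """
    full = (1 << (rows * cols)) - 1
    pieces = []

    def fill(mask):
        if mask == full:
            return True
        first_empty = ((mask + 1) & ~mask).bit_length() - 1  # lowest zero bit
        er, ec = divmod(first_empty, cols)
        for shape in rng.sample(FIXED, len(FIXED)):
            # Every cell before first_empty is filled, so the shape's first
            # (top-most, then left-most) cell must land exactly on it.
            r0, c0 = shape[0]
            cells = [(er + r - r0, ec + c - c0) for r, c in shape]
            if not all(0 <= r < rows and 0 <= c < cols for r, c in cells):
                continue
            piece = 0
            for r, c in cells:
                piece |= 1 << (r * cols + c)
            if piece & mask:
                continue
            pieces.append(cells)
            if fill(mask | piece):
                return True
            pieces.pop()
        return False

    return pieces if fill(0) else None


for piece in random_tiling(5, 6, random.Random(1)):
    print(piece)
```

Because each piece is anchored at its top-left-most cell on the first empty cell, every tiling can be reached in only one way. The search does no lookahead for sealed-off pockets of cells, which is fine for small boards; larger ones might benefit from that kind of pruning.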

Then I implemented the constraint propagation algorithm from Norvig’s Sudoku solver, with the additional wrinkle that it would report if multiple solutions were possible. Instead of marking squares with a list of possible numbers, I marked cards with a list of possible “situated pentominos” that they could be part of. A situated pentomino is a pentomino, possibly rotated and reflected, with a given top-left coordinate. Or, if you prefer, a list of five coordinates, which are orthogonally connected. Propagation consists of two different inferences: (1) if a card can be part of only one pentomino, then that pentomino must be in the solution, so no other pentomino may contain any of its cards. (2) if all pentominos for two cards share some third card, then the third card can only include pentominos from the set of shared cards. The second of these isn’t strictly necessary, but it does make solving much faster.
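A compact sketch of a solution counter along those lines. It assumes the candidate situated pentominos have already been filtered to the ones whose five cards form a valid hand, and that cards are numbered 0 through n-1. It implements only inference (1), read as: once a card has a single candidate left, that pentomino is forced and every other candidate touching any of its cards is dropped. Inference (2) is left out. When propagation stalls, it branches Norvig-style on the card with the fewest candidates, and it stops counting at `limit`, since two solutions are already enough to reject a puzzle. `count_solutions` is a hypothetical name.

```python
def count_solutions(n_cards, candidates, limit=2):
    """Count exact covers of cards 0..n_cards-1 by the candidate pentominos
    (each an iterable of five card indices), stopping early at `limit`."""
    cands = [frozenset(c) for c in candidates]
    start = {card: set() for card in range(n_cards)}
    for i, cand in enumerate(cands):
        for card in cand:
            start[card].add(i)

    def remove(options, g):
        """Delete candidate g from every card it covers; False if a card runs out."""
        for card in cands[g]:
            options[card].discard(g)
            if not options[card]:
                return False
        return True

    def propagate(options):
        """Inference (1): a card with one candidate left forces that pentomino,
        so every other candidate touching its cards is removed."""
        done = set()
        changed = True
        while changed:
            changed = False
            for card in range(n_cards):
                if not options[card]:
                    return False
                if len(options[card]) == 1:
                    (forced,) = options[card]
                    if forced in done:
                        continue
                    done.add(forced)
                    changed = True
                    for other in cands[forced]:
                        for g in list(options[other]):
                            if g != forced and not remove(options, g):
                                return False
        return True

    def search(options):
        if not propagate(options):
            return 0
        open_cards = [c for c in range(n_cards) if len(options[c]) > 1]
        if not open_cards:
            return 1
        card = min(open_cards, key=lambda c: len(options[c]))
        total = 0
        for choice in sorted(options[card]):
            branch = {c: set(opts) for c, opts in options.items()}
            if all(remove(branch, g) for g in options[card] if g != choice):
                total += search(branch)
            if total >= limit:
                break
        return min(total, limit)

    return search(start)


# A 2x5 board (cards 0-4 on top, 5-9 below) covered either by its two rows
# or by two interlocking P pentominos, so it is ambiguous.
print(count_solutions(10, [range(0, 5), range(5, 10), [0, 1, 2, 5, 6], [3, 4, 7, 8, 9]]))  # 2
```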

So generating a puzzle works like this:

- Tile the board with random pentominos

- Generate a random hand for each pentomino

- Check how many solutions the puzzle has.

- If zero: there’s a bug.

- If more than one: pick a random card which could belong to multiple polyominos; re-randomize the polyomino that it’s supposed to belong to.

- If one: ship it!
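The loop above is short once the parts exist. This sketch takes the tiler, the hand dealer, the solution counter, and a query for ambiguous cards as plain functions, all hypothetical stand-ins. The toy run at the bottom swaps in trivial fakes (hands are just numbers, and a “puzzle” is ambiguous whenever two pieces got the same number) purely to exercise the control flow.

```python
import random


def generate_puzzle(tile, deal_hand, count_solutions, ambiguous_cards,
                    rng=random, max_rounds=100_000):
    """Tile the board, deal a hand to each pentomino, then keep re-dealing
    until the puzzle has exactly one solution.

    tile() -> list of pentominos, each a tuple of card positions
    deal_hand(pentomino) -> a random valid hand for that pentomino
    count_solutions(hands) -> number of solutions (0, 1, or more)
    ambiguous_cards(hands) -> positions that could belong to several pentominos
    """
    pentominos = tile()
    hands = {p: deal_hand(p) for p in pentominos}
    for _ in range(max_rounds):
        n = count_solutions(hands)
        if n == 0:
            raise RuntimeError("zero solutions: there's a bug in the generator")
        if n == 1:
            return hands  # ship it!
        card = rng.choice(ambiguous_cards(hands))
        owner = next(p for p in pentominos if card in p)
        hands[owner] = deal_hand(owner)  # re-randomize only that pentomino
    raise RuntimeError("gave up before finding a unique puzzle")


# Toy run with fake parts: hands are numbers, and the "puzzle" counts as
# ambiguous whenever two pentominos were dealt the same number.
toy_rng = random.Random(0)
toy_pieces = [(0, 1, 2, 3, 4), (5, 6, 7, 8, 9), (10, 11, 12, 13, 14)]


def fake_count(hands):
    return 1 if len(set(hands.values())) == len(hands) else 2


def fake_ambiguous(hands):
    values = list(hands.values())
    return [p[0] for p, v in hands.items() if values.count(v) > 1]


puzzle = generate_puzzle(lambda: toy_pieces, lambda p: toy_rng.randint(1, 5),
                         fake_count, fake_ambiguous, rng=toy_rng)
print(puzzle)
```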

Of course, there was a bug in my first version of this — the annoying kind of bug which causes correct results, but too slowly. So I thought, “oh well, I’ll have to reimplement it in C++”. Halfway through the reimplementation, I went to sleep, and when I woke up I realized what the bug was. So I threw away the C++.

As I generate puzzles, I also track the maximum depth that the search has to go down to. The theory is that deeper searches correlate with harder puzzles. So does a wider set of initial possibilities — that is, more possible pentominos per card. In theory, I could have tracked the size of the entire search tree, but in my testing, it didn’t seem to make much difference (and the size of the tree depends on the search order, since a lucky search choice can propagate out to eliminate zillions of possibilities). This is one of the two axes on which I rate puzzles.

The other axis is quality. A puzzle that has all of its complexity in one corner is boring. A puzzle that’s mostly one color is ugly (and the solve gets easy once you know the trick). A puzzle that has flushes on the top and straights on the bottom is less good than one that has them intermixed. I have eight quality metrics. A weighted sum of these metrics is the puzzle’s quality. I generated millions of puzzles, and the final game contains only the top few percent of them. (Of course, it would have been possible to change the puzzle generator to incorporate the quality metrics, but by the time I knew what the quality metrics should look like, I had already generated zillions of puzzles).
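The scoring step is just a weighted sum followed by a cut. In this sketch the metric names and weights are made up (the eight real metrics aren’t spelled out here), and the 3% default is a stand-in for “the top few percent”.

```python
import heapq
import math


def quality(metrics: dict[str, float], weights: dict[str, float]) -> float:
    """A puzzle's quality: the weighted sum of its individual metrics."""
    return sum(weights[name] * value for name, value in metrics.items())


def keep_best(puzzles: list[dict], weights: dict[str, float],
              fraction: float = 0.03) -> list[dict]:
    """Return the top `fraction` of puzzles by quality (at least one), best first."""
    k = max(1, math.ceil(len(puzzles) * fraction))
    return heapq.nlargest(k, puzzles, key=lambda p: quality(p["metrics"], weights))


# Hypothetical metrics: how evenly the difficulty is spread across the board,
# and how evenly the colors are balanced.
weights = {"spread": 2.0, "color_balance": 1.0}
puzzles = [{"id": i, "metrics": {"spread": i % 10, "color_balance": i % 7}}
           for i in range(1000)]
best = keep_best(puzzles, weights)
print(len(best), quality(best[0]["metrics"], weights))
```

`heapq.nlargest` avoids a full sort; with millions of puzzles you would probably stream them from disk into it rather than hold them all in one list.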

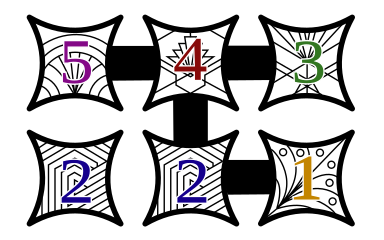

Money

I decided to try a third revenue model. I was inspired here by [Knotwords](https://playknotwords.com/): daily free puzzles, and a subscription for addicts. Like the New York Times Crossword, and like Knotwords, Deco Deck puzzles are easy on Monday and get harder as the week goes on. Unlike Knotwords, Deco Deck puzzles don’t get bigger as the week goes on. The mini is always free, and each day, one of the other three sizes is free; I loop through them. So, on day 1 there’s a free medium, on day 2 there’s a free large, on day 3 a huge, and on day 4 back to a medium. So free users get to experience all of the sizes at all of the difficulty levels over the course of three weeks.
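The three-week figure falls out of the arithmetic, assuming the free size simply cycles medium, large, huge. A three-day cycle and a seven-day week share no common factor, so the pair (weekday, free size) only repeats after 3 × 7 = 21 days, and every size lands on every weekday exactly once in that window. The check below also assumes, hypothetically, that day 1 was a Monday.

```python
PAID_SIZES = ["medium", "large", "huge"]


def free_sizes(day_number: int) -> list[str]:
    """Puzzle sizes that are free on a given day, counting from day 1.

    The mini is always free; one of the other three rotates in each day.
    """
    return ["mini", PAID_SIZES[(day_number - 1) % len(PAID_SIZES)]]


def weekday(day_number: int) -> int:
    """0 = Monday (easiest) ... 6 = Sunday, assuming day 1 was a Monday."""
    return (day_number - 1) % 7


print([free_sizes(d)[1] for d in range(1, 5)])  # ['medium', 'large', 'huge', 'medium']

# Every (weekday, free size) combination shows up within the first 21 days.
combos = {(weekday(d), free_sizes(d)[1]) for d in range(1, 22)}
print(len(combos))  # 21
```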

The Steam version is just a straight up one-time payment. I didn’t feel like dealing with a whole other in-app purchasing system.

I do all of the puzzles every day (although I do sometimes use a hint on the large or huge Saturday or Sunday puzzles). I’m totally hooked, and I hope you’ll get hooked too.

Development

This one took much longer than expected. When I started this project, I told my wife that I would aim for four or five games in my first year. I’m barely hitting three. One reason is that my kid’s preschool unexpectedly shut down for a month, leaving me with extra childcare duties.

Also, getting the visual design right took a long time. One minor example: on the Monthly puzzles screen, it needs to be clear that you can scroll within each difficulty level. So the layout needs to be tweaked so that, regardless of screen size, a puzzle is half-exposed on the right.
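One possible way to get that half-exposed thumbnail, as a sketch with made-up parameter names: decide how many whole thumbnails n fit at roughly the preferred width, then solve for the width w that makes n whole thumbnails, n gaps g, and half of the next thumbnail exactly fill a screen of width W, i.e. W = (n + 0.5)·w + n·g.

```python
def thumbnail_width(screen_width: float, preferred: float, gap: float) -> tuple[int, float]:
    """Return (n, w) such that n whole thumbnails of width w, each followed by
    `gap`, plus half of one more thumbnail exactly span `screen_width`:

        screen_width = (n + 0.5) * w + n * gap

    n is chosen so that w stays close to `preferred`, with at least one whole
    thumbnail on screen.
    """
    n = max(1, round((screen_width - preferred / 2) / (preferred + gap)))
    w = (screen_width - n * gap) / (n + 0.5)
    return n, w


for screen in (360, 390, 768):
    n, w = thumbnail_width(screen, preferred=120, gap=10)
    print(screen, n, round(w, 1))
```

Solving for the width, rather than fixing it and letting the count float, is what keeps the half-thumbnail cue visible on every screen size.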

There are also two exciting new developments in my practice: First, I discovered Post Horn PR, a group that does PR for indie games. So as this blog post goes out, a press release will also hit hundreds of game journalists’ inboxes. I’m grateful to them for their help.

Second, I was playing Deco Deck on the train while taking my kid somewhere, and she wanted to try it out. She is four, so she doesn’t fully get what’s going on. But she can enjoy the sensation of linking and unlinking the numbers, and watching them turn colors. And as I suggest connections, she is learning: now she knows what a flush is. I think she especially loves Deco Deck because it’s something her dad made.

Most of the other tablet games that we’ve found for her are, frankly, crappy. There’s the awful critter one which really wants you to spend money on one or more of their in-game currencies. There’s the one that’s supposed to teach math, where getting the question wrong offers a more fun animation than getting it right. Also, one of its subgames is supposed to teach something about even numbers or division, but actually just teaches trial-and-error. Oh, and it had a gratuitous Christmas thing. I’m an evangelical atheist. I don’t need my kid being proselytized to. Can’t we have math without religion? (I wouldn’t object to the existence of an explicitly Christian math game, but I also wouldn’t get it for my kid).

Oh, and then there’s the one that’s supposed to teach reading, where after every lesson, no matter how badly you do, you get a sticker which you then have to place in your sticker book. Sticker book? Who needs a fucking sticker book? Reading is the key that opens the world of books! You don’t need a sticker: your reward is that you accomplished the task. You learned that C makes the sound at the front of “cat” (it’s always “cat”. Always.) Also, it doesn’t do voice recognition, so it can’t possibly teach reading, and also also some of its early tasks are timed, which makes no sense at all for a tiny kid first learning a skill.

And there’s another that has a bunch of different subgames, but one is so much more fun than the others that they have to gate it: you have to play a bunch of the less-fun games before they let you have one go at the fun one. Their actual reason is probably that the fun one is this: you draw on an egg, and then the egg hatches into a critter with your drawing mapped onto its skin. Then they have to store that critter and display it in your menagerie, and they don’t want to use up all of your storage space. But that’s not the right solution: the right solution would be to have a hard limit on the number of critters. Then either allow re-coloring an existing critter, or allow you to send excess critters to a farm upstate.

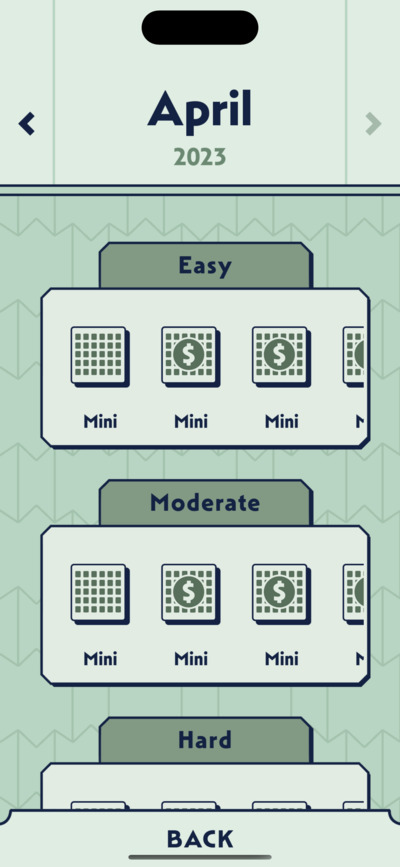

So I am going to make a game that I want my kid to play. It’ll be between ten and twenty small games or other activities. They will mostly not be explicitly educational — no arithmetic worksheets or anything like that. But they will require cognitive skills. They will have intrinsic rewards: yes, there’s a fanfare sound when you solve one, but the real reward is that you did the thing. You accomplished something hard. So far, I’ve got prototypes of four of the games: a working memory game (the game gives you a sequence, you repeat it), an inductive logic game, a game where you move a chess knight, and a graph isomorphism game. What’s a graph isomorphism game?

I’m glad you asked. My original idea was that you would have two graphs, and you would have to prove that they were isomorphic by rearranging the nodes in one to match the other. (I had this idea because I was thinking about how the aspect ratio on modern phones is roughly 2:1 and I wondered what I could do with two squares). But when I showed the original concept to a friend, he didn’t really get it. And then I realized that I could just do direct manipulation, and that the concept was simple enough for a four year old.
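For the original two-graph version, checking the player’s answer is the easy direction: a pairing of nodes proves the graphs isomorphic exactly when it is one-to-one and carries the edges of the first graph onto precisely the edges of the second. A minimal sketch, assuming simple undirected graphs given as node and edge lists and the player’s pairing given as a dict; the name `is_isomorphism` is hypothetical.

```python
def is_isomorphism(nodes_a, edges_a, nodes_b, edges_b, mapping):
    """True if `mapping` (node of A -> node of B) proves A and B isomorphic:
    it must pair the nodes one-to-one and send A's edges exactly onto B's."""
    # Every A node mapped, every B node hit, and no B node used twice.
    if set(mapping) != set(nodes_a) or set(mapping.values()) != set(nodes_b):
        return False
    if len(set(mapping.values())) != len(mapping):
        return False
    # Undirected edges as frozensets, so (u, v) and (v, u) are the same edge.
    mapped = {frozenset((mapping[u], mapping[v])) for u, v in edges_a}
    return mapped == {frozenset(e) for e in edges_b}


# A path a-b-c and a path 1-2-3.
print(is_isomorphism("abc", [("a", "b"), ("b", "c")], [1, 2, 3], [(1, 2), (2, 3)],
                     {"a": 1, "b": 2, "c": 3}))  # True
print(is_isomorphism("abc", [("a", "b"), ("b", "c")], [1, 2, 3], [(1, 2), (2, 3)],
                     {"a": 2, "b": 1, "c": 3}))  # False: b-c would become 1-3
```

Finding the pairing is the player’s job; with direct manipulation, the same edge comparison could run against wherever the player has dragged the nodes.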

One nice thing about building a game for my kid is that I have a tester right there, and she is very excited to try things out. So I get immediate feedback on what’s working. And also unsolicited advice: everything in the game has to be bugs, because once she saw the bees, she got excited.

Intrinsic (working title) is coming probably in late 2023 or early 2024. Tablets and desktop only.

Dominus wrote about games played by aliens. He believes (and I agree) that aliens play Go, and something like poker. And he wonders at the paucity of auction games (I should note that they are a bit more common among hobby board games: Reiner Knizia has five, and Dan Luu was just telling me about Fresh Fish, which I’m eager to try).

Before I even go into some other game mechanics, I want to digress a bit about poker.

Everyone knows that poker is pointless unless played for real money. This is because the right thing in poker is to fold most of the time, but folding is boring. The only thing that will convince you to play right is the prospect of losing money. But what if there’s another way? Imagine a game that plays exactly like Texas Hold’em (no-rebuys and tournament-style, meaning that everyone starts with the same amount of chips), but with the following change: when you win a hand, the chips you win get turned into victory points and added to your VP total. You can’t use them as chips again. Now, if you go all-in, you will not play another hand even if you win. But maybe you will win enough VP to wind up the eventual game winner. This game still sucks by modern standards, because it involves player elimination, but I wonder if it could be the basis of a more reasonable game. You could fix the player elimination by giving everyone a few chips at the start of every hand, but then you need some way to end the game. Maybe the first one to 500 points wins?

Ok, end digression. On to some infrequently-used game mechanics.

Perception

Some time ago, I invented a game where you try to determine physical quantities. Like, you’re given a box full of weights, and you have to determine how many grams it is, just by hefting it. Or you’re given a rod and asked to figure out how long it is (probably no touching or measuring against your hands allowed). You could do temperature (but only within a narrow range, and it would be tricky to implement), luminous intensity, and time too. My friend Jay suggested absolute pitch, which I hate because I am totally non-musical, but it clearly fits the game.

One of my favorite experiments involves pitch (in some sense): it turns out that the frequency of the tingle of Sichuan peppercorns is 50Hz.

One of the few perception games I know of is played in Ethiopia. A friend of mine went there to design games for girls. She said that the girls never wanted to use randomness in their games. To select a first player or whatever, they would instead hide three stones in one hand and four in another, and ask another player to figure out which hand had more stones. Of course, this is also a deception game.

Short-term memory

Many card games that involve counting use short-term memory. But I’m imagining something where the memory is explicitly the mechanic. Like Simon, but multiplayer.

Watch a chimp kick our asses at a short-term memory game.

Pain tolerance

Dominus suggests a version of chess where you can make your opponent take a move back by chopping off one of your fingers. This is rather permanent (at least for humans). But what about temporary pain?

In Philip K. Dick’s Eye In The Sky, a character sticks his hand over a cigarette lighter to prove how holy he is.

“Ordeal by fire,” Brady explained, igniting the lighter. A flash of yellow flame glowed, “Show your spirit. Show you’re a man.”

“I’m a man,” Hamilton said angrily, “but I’ll be damned if I’m going to stick my thumb into that flame just so you lunatics can have your frat-boy ritualistic initiation. I thought I got out of this when I left college.”

Each technician extended his thumb. Methodically, Brady held the lighter under one thumb after another. No thumb was even slightly singed.

“You’re next,” Brady said sanctimoniously. “Be a man, Hamilton. Remember you’re not a wallowing beast.”

This is, of course, not about pain; the technicians really are being protected by God (or what passes for God in the reality where Hamilton finds himself stuck). But it gets the idea across: this is a game that people could play, if only we weren’t such wimps.

If you wanted to make a safe version of the cigarette lighter game, you could use the thermal grill illusion. I would actually buy a thermal grill illusion device if someone made a commercial version.

The World Sauna Championships were, I guess, a version of this. One competitor described it as “quite possibly the world’s dumbest sport”. As you can probably predict, someone died and they canceled the event.

Robert Yang made a game about a video of men hurting each other. Competitively? Definitely in some sense. Hard Lads is among the safest-for-work of Yang’s games, but I still would not watch it in any office I have ever worked in. I don’t claim that Yang’s game is itself an instance of pain as a game mechanic, only that it might portray such a game.

Conclusion

I mostly just wanted an excuse to quote PKD and link to Robert Yang and the Sichuan peppercorn thing.

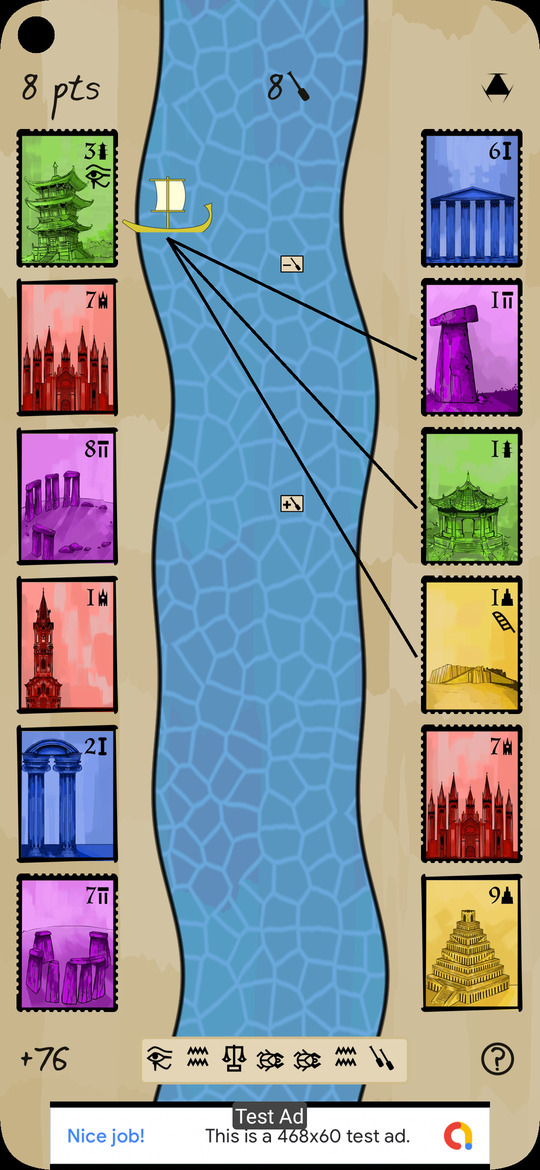

Game overview

I just released a new game!

Mosaic River is a mobile solitaire game. You’re an archaeologist, sailing down a river, collecting mosaic tiles, and placing them into a triangular grid to make melds (straights, flushes, etc.). Tiles have bonuses randomly assigned in each game.

You can try it now: it’s free (ad-supported) on iOS and Android.

It’s perhaps easiest to explain by describing some of the influences.

Influences

The first is Uwe Rosenberg’s Patchwork, one of the finest board games ever created. In Patchwork, the acting player can choose any of the three tiles in front of a marker, and choosing a tile advances the marker to that position. This means sometimes you might want to take a suboptimal piece to skip over a piece that would be really good for your opponent. In Mosaic River, you draft by sailing across the river and choosing one of the next three tiles. Choosing a nearer tile will allow you to collect more tiles overall, but it has a cost. Choosing a farther tile might mean that you skip tiles you want, but in return you gain more optionality later (or, at worst, a few extra points).
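A minimal sketch of that drafting rule, treating the river as a single line of tiles (a simplification I’m assuming; it ignores banks, bonuses, and scoring). The boat picks one of the next three tiles, and anything it sails past is gone for good.

```python
from typing import Callable, List, Sequence

LOOKAHEAD = 3  # "choosing one of the next three tiles"


def legal_choices(position: int, river_length: int) -> List[int]:
    """Indices of the (up to) three tiles just ahead of the boat.

    The boat starts at position -1, before the first tile.
    """
    return list(range(position + 1, min(position + 1 + LOOKAHEAD, river_length)))


def sail(river: Sequence[str], pick: Callable[[List[int]], int]) -> List[str]:
    """Draft down the whole river; tiles the boat sails past are skipped forever."""
    position = -1
    collected = []
    while True:
        choices = legal_choices(position, len(river))
        if not choices:
            return collected
        position = pick(choices)
        collected.append(river[position])
```

With a nine-tile river, always taking the nearest tile (`sail(river, min)`) collects all nine, while always taking the farthest (`sail(river, max)`) collects only three. Real play sits somewhere in between.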

Another influence is diffusion-limited aggregation (DLA). In DLA, particles wander around randomly until they touch a growing cluster, and then they stick to it. You end up with these branching, tree-like structures. This influenced the design of Mosaic River’s connectivity rules. Half of the tiles are “center” tiles, which need to be next to two other tiles, and the other half are “edge” tiles, which need to be next to only one. The idea is to create a similar bushy structure. But since you only end up taking a small subset (perhaps a third) of the available tiles, and because center tiles want to connect in multiple ways in a compact cluster, we need to give a little bonus to edge tiles to get the desired shape.
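For anyone who hasn’t seen DLA, here’s a minimal on-lattice sketch. It uses a square grid with walkers released at a random border cell and clamped at the edges; that’s one common variant (others release walkers from a circle around the cluster), and it is not how Mosaic River itself is implemented.

```python
import random
from typing import Set, Tuple

Cell = Tuple[int, int]
NEIGHBORS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def dla(size: int, particles: int, seed: int = 0) -> Set[Cell]:
    """On-lattice diffusion-limited aggregation on a size x size square grid.

    Starts from one seed cell in the middle. Each particle is released at a
    random border cell, random-walks (staying on the grid) until it is next
    to the cluster, and then sticks there.
    """
    if not 0 <= particles < size * size // 4:
        raise ValueError("keep the cluster well inside the grid")
    rng = random.Random(seed)
    middle = size // 2
    cluster = {(middle, middle)}

    def touches_cluster(x: int, y: int) -> bool:
        return any((x + dx, y + dy) in cluster for dx, dy in NEIGHBORS)

    while len(cluster) < particles + 1:
        k = rng.randrange(size)
        x, y = rng.choice([(k, 0), (k, size - 1), (0, k), (size - 1, k)])
        if (x, y) in cluster:
            continue  # the cluster already reached the border here; try again
        while not touches_cluster(x, y):
            dx, dy = rng.choice(NEIGHBORS)
            # Clamp at the edges so the walker never leaves the grid.
            x = min(max(x + dx, 0), size - 1)
            y = min(max(y + dy, 0), size - 1)
        cluster.add((x, y))
    return cluster
```

Because walkers usually bump into the outer tips before they can wander into the gaps, the tips grow fastest, which is where the bushy shape comes from.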

Of course, Lost Cities is also an archaeology-themed card game with five suits, but it wasn’t consciously an influence. It’s just that every kid dreams of being Howard Carter or Indiana Jones. I’ve been listening to the History of Egypt podcast too, although I think I am likely to give up, since its balance often tilts further toward the pageantry of Egyptian religious celebrations, and away from the narrative, than I would prefer.

Ads

I’ve decided to try a different revenue model with Mosaic River than with Surfwords. I’m doing ads. This sucks, and I hate it, but since Surfwords isn’t selling, I’m going to try something different.

It was pretty easy to get AdMob working with Godot, but the plugin is a little dumb: it doesn’t seem to understand that you need different AdMob IDs for Android and iOS but that you otherwise want (usually) the same codebase for each. (Actually, looking at the config, maybe it does understand this, and I’m just doing something wrong.)

I guess we’ll see how ads perform financially. My next game (see below) will try the freemium model and maybe that will do better.

Challenges

Scaling

As with Surfwords, getting graphical elements to scale nicely to different screen sizes is a hassle. I ended up having to adjust the dimensions of the mosaic to accommodate older (wider) iPhones. It looks like phone manufacturers are starting to standardize somewhat on very skinny ratios, roughly 2:1, but older iPhones and Android phones still exist in the world and must be usable. The long skinny shape is actually somewhat restrictive for a game developer: as a random example, on some modern phones, anything circular can take up at most 35% of the screen, since its diameter can’t exceed the screen’s width (for a 4:3 screen, it’s 58%).
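The arithmetic behind those percentages, assuming the circle’s diameter is capped by the screen’s short side w, with h the long side:

$$
\frac{\text{circle area}}{\text{screen area}} = \frac{\pi (w/2)^2}{w\,h} = \frac{\pi}{4}\cdot\frac{w}{h}
$$

$$
4{:}3:\ \frac{\pi}{4}\cdot\frac{3}{4} = \frac{3\pi}{16} \approx 0.589, \qquad
2{:}1:\ \frac{\pi}{8} \approx 0.393, \qquad
20{:}9:\ \frac{\pi}{4}\cdot\frac{9}{20} = \frac{9\pi}{80} \approx 0.353
$$

So an exact 2:1 screen still allows about 39%; getting down to 35% takes something a bit skinnier, like 20:9 (which is my example ratio, not one named above).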

As usual, Godot’s layout model is not as user-friendly as one would hope. It even makes me miss CSS, which is saying something.

Google’s Kafkaesque process

Google refused to approve my app, because it was crashing on startup. Weirdly, this didn’t occur during their pre-launch checks. During pre-launch, crashes are reported with logs. During approval, you can’t get logs. This makes no sense. I uploaded a new version with some random added logging, and of course the crash went away. But please let me know if you run into a crash, and, if you can, send me an adb logcat.

Godot-Admob bugs; Apple bugs

edit

Of course, as soon as I released, someone pointed me to an issue that didn’t exist on any of the devices I tested on (and that Apple somehow totally failed to flag — so much for the supposed quality review). Then when I went to upload a hacked-together fix, I ran into a random Apple bug that Apple, of course, didn’t report on their system status page. It eventually fixed itself. The fixed version is now released.

end edit

Art direction

It turns out that I do not enjoy art direction. This is about me: I don’t want to tell an artist that I don’t like their work, and that they have to do it over again. I think I would feel differently if an artist were a true partner in the project, instead of a hired gun.

I’m working with a designer on my next game (see below). This will, I hope, be a different experience, since instead of requesting spot art, I’m asking someone to work out the entire visual design of the game. It’s still a contractual relationship with me taking all of the risk, but it feels like a different story, since it’s not someone working to a specification, but rather someone creating their own visual story.

Shader performance

I used Material Maker to create the two main backgrounds of my game. This is a model that I really like: you plug some nodes together, maybe write some light math, and out comes an image at whatever scale you like. But it’s also somewhat opaque: when it performs badly, you have to go into a bunch of generated code to figure out what’s going on.

The marble-ish mosaic background ended up just baked into an image. Easy, and performant. The river background was way more complicated, since I wanted something animated and not obviously repeating. The problem is that phones have very crappy GPUs, so doing anything at all complicated blows the framerate budget out of the water. I didn’t really expect this, since the game does not have a ton of visual effects. So maybe I’m doing something wrong, or maybe Godot is. Or maybe GPUs are really good at slinging a lot of pixels around but not so good at generative stuff.

I definitely noticed that Material Maker does not have the ability to optimize one common case. And there was another missing feature too, which I just hacked around in the end.

Scoring and bonuses

I redesigned the scoring several times. While working on the code to compute straights, I noticed that a single tile could be part of multiple straights. This meant that the optimal strategy was to ignore all other melds, and just try to maximize the number of straights. I fixed this by adjusting the scoring: I made straights score linearly rather than quadratically in length, and made them slightly lower scoring on average than flushes or n-of-a-kind.
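Here’s a minimal sketch of the double-counting problem. It assumes a square grid read in four directions (the real game uses a triangular grid, with its own axes), a hypothetical minimum straight length of 3, and hypothetical point values; it doesn’t model the “slightly lower than flushes” part of the fix.

```python
from typing import Callable, Dict, List, Tuple

Cell = Tuple[int, int]
# Hypothetical: a square grid read in all four directions, so a straight can
# run either way. A triangular grid would use its own axes instead.
DIRECTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1)]
MIN_LENGTH = 3  # hypothetical shortest scoring straight


def find_straights(board: Dict[Cell, int]) -> List[List[Cell]]:
    """Maximal runs of cells whose ranks go up by exactly 1 per step.

    Each run is recorded once, in its ascending direction, but a single cell
    can belong to several runs (one per direction).
    """
    straights = []
    for (x, y), rank in board.items():
        for dx, dy in DIRECTIONS:
            if board.get((x - dx, y - dy)) == rank - 1:
                continue  # not the start of a run in this direction
            run = [(x, y)]
            while True:
                cx, cy = run[-1]
                nxt = (cx + dx, cy + dy)
                if board.get(nxt) != board[run[-1]] + 1:
                    break
                run.append(nxt)
            if len(run) >= MIN_LENGTH:
                straights.append(run)
    return straights


def score(straights: List[List[Cell]], per_straight: Callable[[int], int]) -> int:
    return sum(per_straight(len(run)) for run in straights)


def quadratic(length: int) -> int:
    return length * length


def linear(length: int) -> int:
    return 2 * length  # hypothetical: 2 points per tile
```

A plus sign made of two crossing 1-2-3-4-5 straights uses only nine tiles, but the shared middle tile counts in both: quadratic scoring gives 50 points, linear gives 20. Under quadratic scoring, every extra crossing straight is worth more than the tiles it uses, which is how straights ended up crowding out every other meld.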

Deciding which tiles got bonuses was also a little tricky. Initially, I randomly allocated bonuses. But this didn’t work for “meld bonuses”. Meld bonuses are bonuses that stick to the tiles. They are represented by scarabs, feathers, and eyes. If you have all three tiles with scarabs (say) directly adjacent, you get a lot of points. But you can’t do that if all three bonuses end up on edge tiles. So then I moved the bonuses so that they were only on center tiles. But you could still end up in a bad situation: if two identical meld bonuses end up directly across the river from each other, you can’t collect both (except with another bonus, which you might not have gotten yet). This felt unfair, and thus unfun.

I thought of a number of complicated ways to allocate bonuses to try to avoid this, but none of them seemed likely to work. So I decided to just reshuffle the deck until that situation was avoided.
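“Reshuffle until it’s OK” is rejection sampling. A minimal sketch, assuming each bank is a row of center-tile slots and that “directly across” means the same index on both banks (a simplification of the real layout). It only terminates if a fair layout exists, which is easy to guarantee by having plenty of bonus-free slots.

```python
import random
from typing import List, Optional, Sequence, Tuple

Bank = List[Optional[str]]


def is_fair(near: Bank, far: Bank) -> bool:
    """True unless some meld bonus faces an identical one across the river."""
    return all(a is None or a != b for a, b in zip(near, far))


def deal(bonuses: Sequence[str], slots_per_bank: int, rng: random.Random) -> Tuple[Bank, Bank]:
    """Shuffle bonuses into center-tile slots on both banks, reshuffling
    until no identical pair sits directly across the river."""
    if len(bonuses) > 2 * slots_per_bank:
        raise ValueError("more bonuses than slots")
    deck: Bank = list(bonuses) + [None] * (2 * slots_per_bank - len(bonuses))
    while True:
        rng.shuffle(deck)
        near, far = deck[:slots_per_bank], deck[slots_per_bank:]
        if is_fair(near, far):
            return near, far
```

Rejecting whole shuffles (rather than patching up the bad spots) keeps every fair layout equally likely, which is hard to guarantee with the more complicated allocation schemes.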

Discovering America

While I was thinking about how to score intersecting straights, I considered a rule that a tile could only count for one meld. But then the melds would have to be chosen carefully. There were three unpalatable choices:

The player could choose the melds at the end of the game (creating a tedious task right when the user wants the reward of seeing their final score).

The player could choose them during the game (interrupting flow and forcing them to make choices that they would likely regret).

The game could consider all of the possibilities and choose the best (creating an opaque scoring system).
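The third option is a small weighted set-packing problem: pick melds that share no tile, maximizing total points. That’s NP-hard in general, but a single board has few enough candidate melds that brute force is plausible. A minimal sketch, with hypothetical melds represented as (tiles, points) pairs:

```python
from typing import FrozenSet, List, Sequence, Tuple

Meld = Tuple[FrozenSet[str], int]  # (tiles used, points)


def best_disjoint(melds: Sequence[Meld]) -> Tuple[int, List[Meld]]:
    """Exhaustively pick melds that share no tile, maximizing total points.

    Exponential in the number of candidate melds: fine for a handful.
    """
    if not melds:
        return 0, []
    first, rest = melds[0], melds[1:]
    # Option 1: skip the first meld.
    skip_score, skip_pick = best_disjoint(rest)
    # Option 2: take it, and drop every remaining meld that overlaps it.
    compatible = [m for m in rest if not (m[0] & first[0])]
    take_score, take_pick = best_disjoint(compatible)
    take_score += first[1]
    if take_score > skip_score:
        return take_score, [first] + take_pick
    return skip_score, skip_pick
```

With a 9-point meld on tiles {a, b, c} and two 5-point melds on {a, d} and {c, e}, the best choice is the two small melds (10 points), not the big one (9). That’s exactly the kind of result a player would find baffling if the game silently picked it for them.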

I decided to do none of these, and to just allow intersecting straights. But I realized that, on its own, the decision problem was actually interesting — it just didn’t fit with the vibe of Mosaic River. So my next game will be about deciding how tiles should be arranged into melds. I’ve tried it out, and I’m really, really excited about it. Watch this space for news!

Previously, I reported on my attempt to use Stable Diffusion.

Dan Luu posted it to Hacker News, and there were some comments. Here are some responses:

Comments suggesting img2img, masking, and AUTOMATIC1111: that’s what I was doing, as I think the images in the previous post make clear.

Comments suggesting that maybe my prompt was no good: I didn’t put in anything like “oneiric”, or “blurry”, or “objects bleeding into each other”, or “please fuck up the edges.” So I don’t think it’s reasonable to blame my prompt for the particular problem that I was complaining about. One commenter suggested that I should have said “pier” instead of “dock”, but actually, judging by Google Images, I was looking for a dock (a place you tie up your boat).

Comments suggesting learning to draw, or that the problem is that I’m not an artist: actually, I am a bit of an artist: I make weird pottery.

I’m just not an illustrator. I have dysgraphia, which makes illustration challenging. The example I like to give when I explain this is: I go to write the letter d, and the letter g comes out. That’s not because I don’t know what the difference is, or because I can’t spell. It’s because somehow my hand doesn’t do what my brain wants. Also, even if I were an illustrator, I would still like to save some time.

The problem isn’t that I can’t figure out the composition of scenes: as the post shows, I was able to adjust that just fine. The problem is that the objects blur into each other.

Others suggested that I could save time by using 3d rendering, as Myst did. This seems preposterous: Myst took a vast amount of time to model and texture. Yes, I would only have to model a place once to generate multiple images. But the modeling itself is a large amount of work. And then making the textures brings me right back to the problem of how to create images when I can’t draw.

Some commenters suggested that in fact I could get away with fewer than 2500 images. On reflection, this might be right. From memory (it’s been a while since I played Myst), the four main puzzle ages each have maybe 20 locations, with an average of, say, three look directions per location. Then there’s D’ni (only a few locations), and Myst Island itself, which has roughly one age’s worth of puzzles per puzzle age. That would be about 500 images. Maybe they are counting all of the little machine bits as separate images? Maybe I am forgetting a whole bunch of interstitial locations? Still, 500 images is also outside my budget, though less so.
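Spelled out, reading “one age’s worth per puzzle age” as Myst Island being about as big as the four puzzle ages combined (my interpretation of the rough estimate above):

$$
\underbrace{4 \times 20 \times 3}_{\text{puzzle ages}} = 240,
\qquad
240 + \underbrace{{\sim}240}_{\text{Myst Island}} + \underbrace{\text{a few}}_{\text{D'ni}} \approx 500
$$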

Comments suggesting that it takes a lot of selection: yes, this is after selecting from roughly a dozen images each time. Generating and evaluating hundreds of images would not be a major time savings. If the problem were that I’m particular about composition, it would be reasonable to search through many images to find a good base (and, indeed, that was the part of the test where I was casting the broadest net). The problem is that I’m picky about correctness, and picking through a bunch of images for correctness is slow and frustrating.

Comments suggesting photoshopping afterwards (as opposed to “just draw the approximate color in the approximate place” during img2img refinement): If I could draw, I would just draw.

Comments suggesting many iterations of img2img, photoshopping, back to img2img, etc: 1. Ain’t nobody got time for that. 2. My post shows me trying that: it doesn’t solve my fundamental problem.

Comments suggesting MidJourney: It’s basically a fine-tuning of the Stable Diffusion model, so it’s likely to have the same problems with blurry and incoherent objects. Its house style is pretty fuzzy, which hides some of the incoherence, but for a game like this it’s important to know exactly where you are, and where an object’s edges are. The Colorado State Fair image (which was generated in part by MidJourney) shows some of the incoherence: look at the woman in the center — what’s that stuff on her left side? Part of the lectern? A walking stick held by the third arm coming out of the waist of her dress? Also, trying to do any refinement through a Discord chat seems even more painful than trying to use AUTOMATIC1111, which is already pretty slow and clunky.

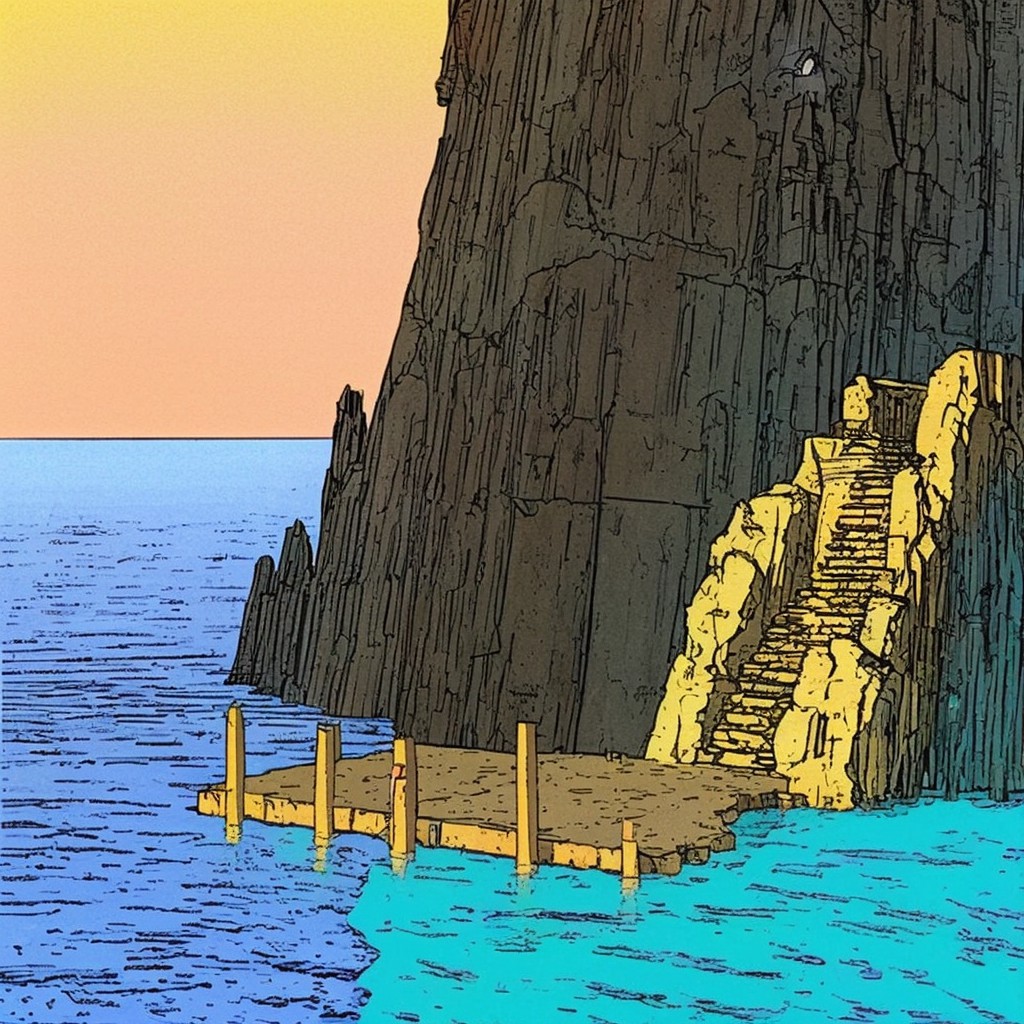

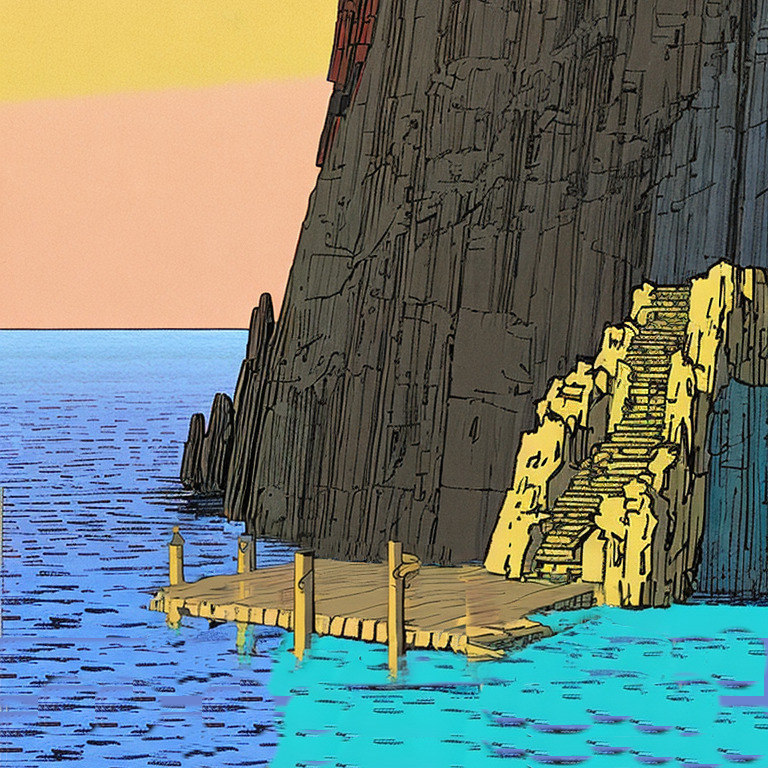

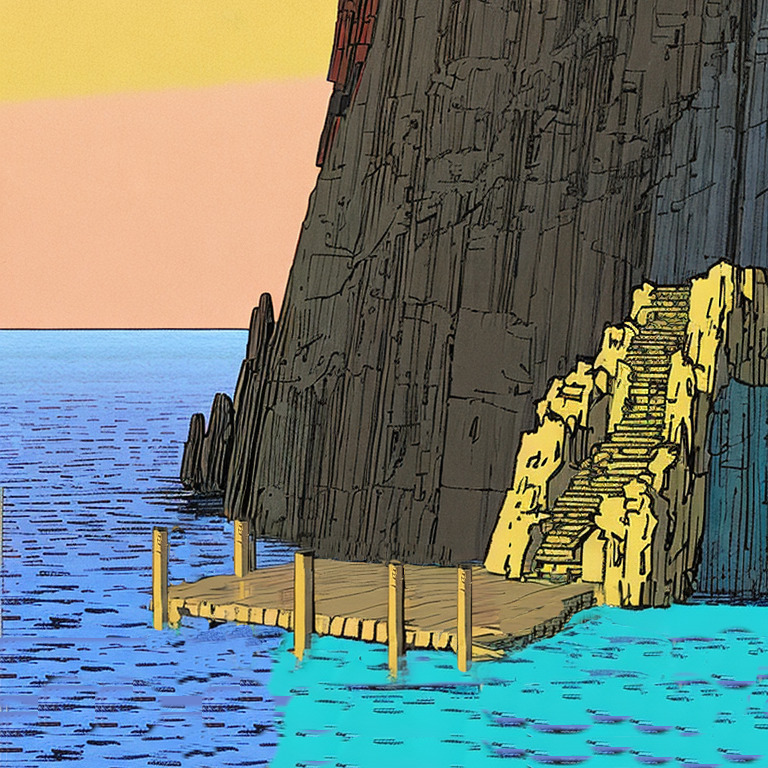

One comment with an improved image: well, it’s a physically plausible object now, if you don’t mind that the dock is now made of stone instead of wood. I wonder why I was unable to get that. The image:

Also, the rightmost post is still sort of fading into the dock at the top. Maybe I was just unlucky? I don’t think the prompt was any better than mine. That gives me hope for the future. Next time a new model comes out, I’ll probably try again.

I really want to make a game along the lines of Myst. I have been collecting puzzles for years, and I have almost enough. I am flexible about the visual style. I’ve considered making sets out of Lego, or cardboard, or cut paper. And I’ve considered AI-generated art.

I am willing to accept compromises on precision: If the palm tree ends up on the left side instead of the right, that’s OK. It’s even OK if it’s a date palm when I visualized a coconut palm. I might even settle for a willow.

But it’s important that it looks like a place you could be in, and that the objects look like objects that could physically exist. This is why I’m so frustrated with Stable Diffusion. It’s so close to giving me what I want. But it’s not there yet, because it does not comprehend physical objects.

I asked for a dock at the bottom of a cliff. It gave me some stairs at the bottom of a cliff (admittedly, some of the other images had some vaguely dock-like things, but none had the perspective I wanted: almost nobody takes a picture of a dock from the water).

Ok, the stairs look great. I’ll just paint in where I want a dock, and have it clean up that part. (I also rearranged the composition a little to make room.)

Here’s what I got:

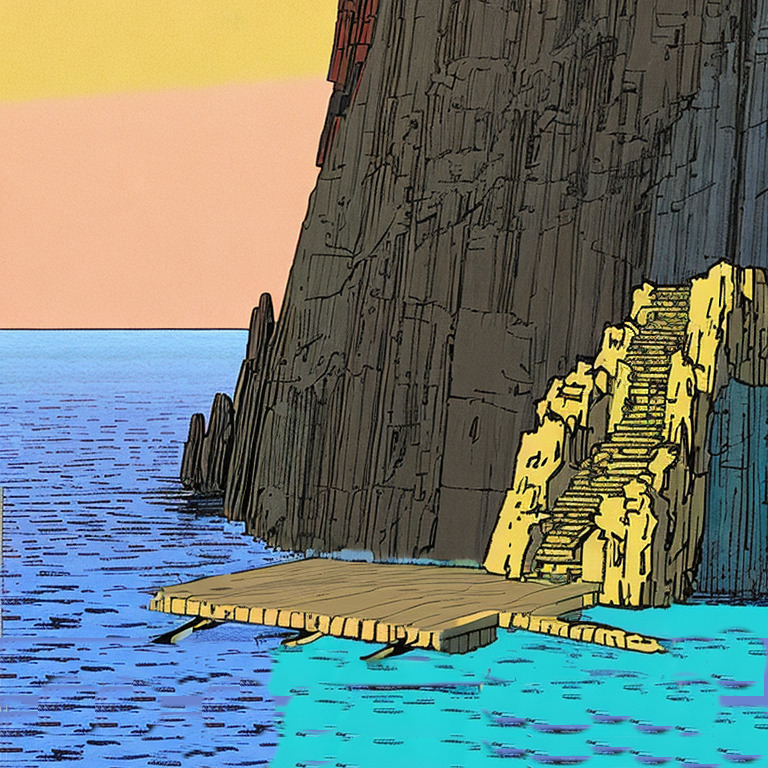

OK, that’s sort of a dock. Like, there’s nowhere to tie up your boat, and it’s got weird protrusions, and it’s somehow below the waterline, but I can work with that. I’ll erase the protrusions, fix the rear, and add some posts.

Then I’ll tell Stable Diffusion to just clean up those parts:

Oh look, it decided to put a weird vertical line in the middle of my dock. Why? And also, it keeps wanting to fuck up the right side of the dock. OK, I’ll clean it up with the clone tool:

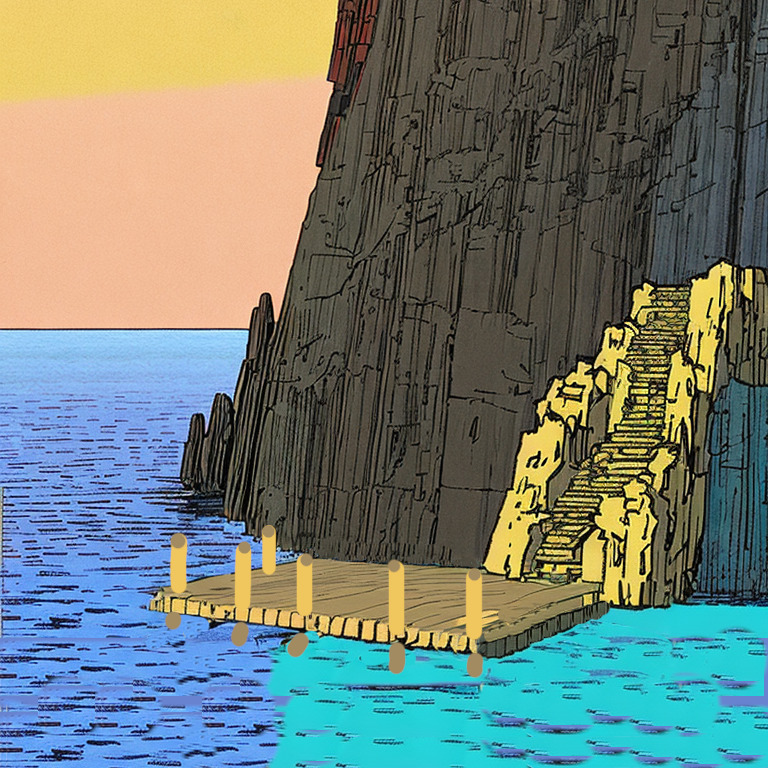

Sure, we lost a post, but at this point, I don’t care. Stable Diffusion, please clean up the clonejunk…

No! You fucked up the right side of my dock again! Don’t you know how solid objects work?

Aargh, I give up. I’ll just hire Mœbius to do the artwork for my game. What’s that you say? He’s dead? Noooooo!

(Yes, I could pay an artist to make art for the game. But I’m not yet good enough at game marketing for this to make sense: The original, 1990s Myst had 2,500 images, which would cost me more than I expect to make this year. Also, it would take an absurd amount of time. So I won’t do that … yet.)

The most important thing is that Surfwords now has a demo available.

I didn’t start out with a demo, because I was worried that it would cannibalize my sales. I am not worried about that anymore, because to a first approximation, I have had no sales. Surfwords briefly reached #7 on the iOS paid word game chart. This is hilarious, because I’ve sold fewer than 20 copies of Surfwords on iOS (and fewer on all other platforms put together).

To me, even before I wrote it, it was obvious that Surfwords is the sort of game that I would want to play. I like very hard word games. I know that Super Hexagon is good. Ghost is fun (if you’re stuck in a car and bored of Botticelli). The combination is just obviously going to be fun.

So why haven’t I sold a zillion copies? Or even enough copies to pay the bills? I don’t know, but I’m going to see if having a demo helps.

And then I’m going to go back to working on my next game.